The compelling advantage of VoIP is that it is far cheaper than circuit-switched technology. But VoIP calls often sound horrible. It doesn’t have to be this way. Although VoIP is intrinsically prone to jitter, delay and packet loss, good system design can mitigate all these impairments. The simplest solution is over-provisioning bandwidth.

The lowest bandwidth leg of a VoIP call, where the danger of delayed or lost packets is the greatest, is usually the ‘last mile’ WAN connection from the ISP to the customer premises. This is also where bandwidth is most expensive.

On this last leg, you tend to get what you pay for. Cheap connections are unreliable. Since businesses live or die with their phone service, they are motivated to pay top dollar for a Service Level Agreement specifying “five nines” reliability. But there’s more than one way to skin a cat. Modern network architectures achieve high levels of reliability through redundant low-cost, less reliable systems. For example, to achieve 99.999% aggregate reliability, you could combine two independent systems (two ISPs) each with 99.7% reliability, three each with 97.8% reliability, or four each with 94% reliability. In other words, if your goal is 5 minutes or less of system down-time per year, with two ISPs you could tolerate 4 minutes of down-time per ISP per day. With 3 ISPs, you could tolerate 30 minutes of down-time per ISP per day.
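The arithmetic behind those figures is easy to check. The sketch below assumes the ISP links fail independently (an idealization; links that share a conduit or an upstream provider will not), so the service is down only when every link is down at once. The function names are illustrative.

```python
MINUTES_PER_DAY = 24 * 60


def aggregate_availability(per_link: float, links: int) -> float:
    """Availability of `links` independent links used in parallel.

    The service is unavailable only when all links are down together,
    which happens with probability (1 - per_link) ** links.
    """
    return 1.0 - (1.0 - per_link) ** links


def required_per_link(target: float, links: int) -> float:
    """Per-link availability needed to reach `target` with `links` links."""
    return 1.0 - (1.0 - target) ** (1.0 / links)


def downtime_minutes_per_day(availability: float) -> float:
    """Average minutes of downtime per day implied by an availability."""
    return (1.0 - availability) * MINUTES_PER_DAY


if __name__ == "__main__":
    target = 0.99999  # "five nines"
    for n in (2, 3, 4):
        per_link = required_per_link(target, n)
        print(
            f"{n} ISPs: each needs {per_link:.3%} availability, "
            f"i.e. up to {downtime_minutes_per_day(per_link):.1f} "
            f"minutes of downtime per ISP per day"
        )
```

Run as written, it gives roughly 99.68%, 97.85% and 94.38% per link, or about 4.6, 31 and 81 minutes of tolerable downtime per ISP per day, in line with the rounded figures above.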

Here’s a guest post from Dr. Cahit Jay Akin of Mushroom Networks, describing how to do this:

Clearing the Cloud for Reliable, Crystal-Clear VoIP Services

More companies are interested in cloud-based VoIP services, but concerns about performance hold them back. Now there are technologies that can help.

There’s no question that hosted, cloud-based Voice over IP (VoIP) and IP-PBX technologies are gaining traction, largely because they reduce costs for equipment, lines, manpower, and maintenance. But there are stumbling blocks – namely around reliability, quality and weak or non-existent failover capabilities – that are keeping businesses from fully committing.

Fortunately, there are new and emerging technologies that can optimize performance without the need for costly upgrades to premium Internet services. These technologies also protect VoIP services from jitter, latency caused by slow network links, and other common unpredictable behaviors of IP networks that impact VoIP performance. For example, Broadband Bonding, a technique that bonds various Internet lines into a single connection, boosts connectivity speeds and improves management of the latency within an IP tunnel. With multiple links available, advanced algorithms can closely monitor the WAN links and make intelligent decisions about each packet of traffic to keep packets from arriving late or being lost during communication.

VoIP Gains Market Share

The global VoIP services market, including residential and business VoIP services, totaled $63 billion in 2012, up 9% from 2011, according to market research firm Infonetics. Infonetics predicts that the combined business and residential VoIP services market will grow to $82.7 billion in 2017. While the residential segment makes up the majority of VoIP services revenue, the fastest-growing segment is hosted VoIP and Unified Communications (UC) services for businesses. Managed IP-PBX services, which focus on dedicated enterprise systems, remain the largest business VoIP services segment.

According to Harbor Ridge Capital LLC, which did an overview of trends and mergers & acquisitions activity of the VoIP market in early 2012, there are a number of reasons for VoIP’s growth. Among them: the reduction in capital investments and the flexibility hosted VoIP provides, enabling businesses to scale up or down their VoIP services as needed. Harbor Ridge also points out a number of challenges, among them the need to improve the quality of service and meet customer expectations for reliability and ease of use.

But VoIP Isn’t Always Reliable

No business can really afford a dropped call or a garbled message left in voicemail. But these mishaps do occur when using pure hosted VoIP services, largely because they are reliant on the performance of the IP tunnel through which the communications must travel. IP tunnels are inevitably congested and routing is unpredictable, two factors that contribute to jitter, delay and lost packets, which degrade the quality of the call. Of course, if an IP link goes down, the call is dropped.
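Jitter and loss can be measured directly from packet timestamps and sequence numbers. As an illustration, here is a minimal sketch of the running interarrival jitter estimate defined for RTP in RFC 3550, plus a simple loss fraction. Timestamps in milliseconds and the function names are assumptions made for this example; real RTP stacks work in units of the codec's clock and handle sequence-number wraparound, which this sketch ignores.

```python
from typing import Sequence


def interarrival_jitter(send_ms: Sequence[float], recv_ms: Sequence[float]) -> float:
    """Running jitter estimate in the style of RFC 3550, section 6.4.1.

    For each consecutive pair of packets, D is the change in transit
    time; the estimate moves 1/16 of the way toward |D| each step.
    """
    jitter = 0.0
    for i in range(1, len(send_ms)):
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter


def loss_fraction(received_seq: Sequence[int]) -> float:
    """Fraction of packets missing between the lowest and highest
    sequence numbers seen (no wraparound handling)."""
    if not received_seq:
        return 0.0
    expected = max(received_seq) - min(received_seq) + 1
    return 1.0 - len(set(received_seq)) / expected


if __name__ == "__main__":
    # Packets sent every 20 ms; the third one is held up in the network.
    sent = [0.0, 20.0, 40.0, 60.0]
    received = [50.0, 70.0, 106.0, 110.0]
    print(f"jitter estimate: {interarrival_jitter(sent, received):.4f} ms")
    print(f"loss: {loss_fraction([1, 2, 4, 5]):.0%}")
```

A link with constant delay, however long, yields a jitter of zero; it is the variation in delay that forces the receiver to buffer and, past a point, to discard late packets.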

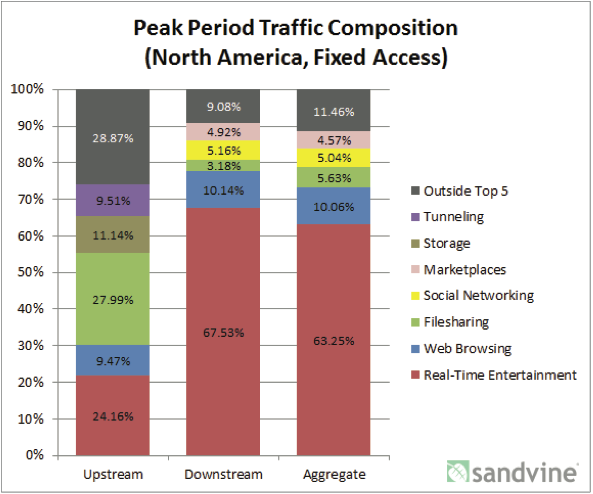

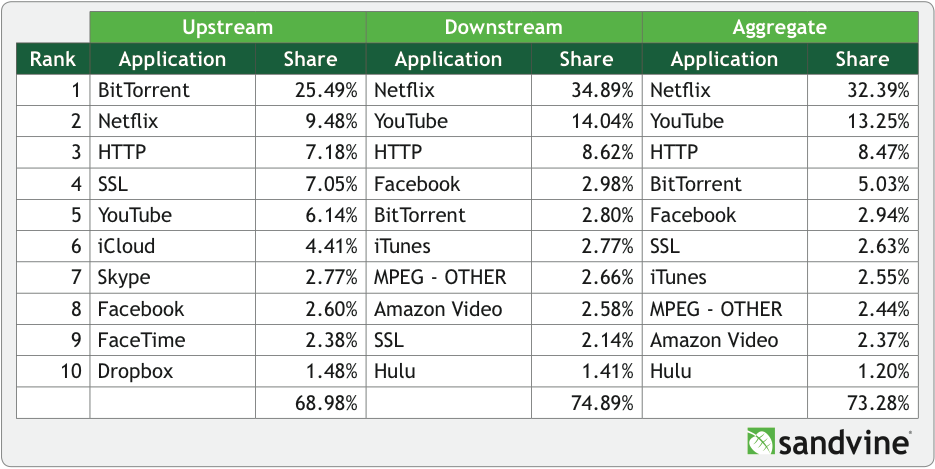

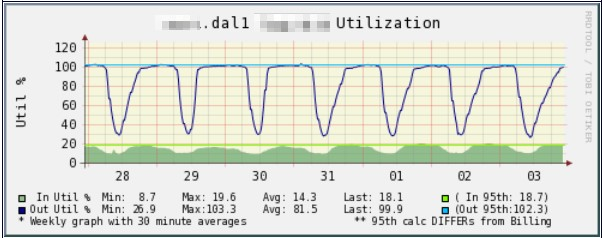

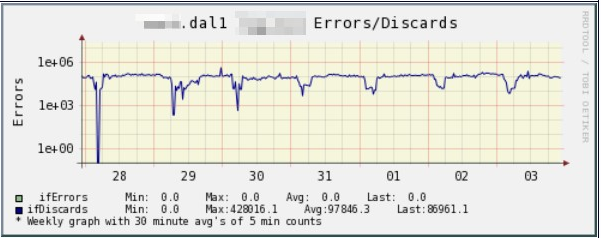

Hosted, cloud-based VoIP services offer little in the way of traffic prioritization, so data and voice fight it out for Internet bandwidth. And there’s little monitoring available. IP-PBX servers placed in data centers or at the company’s headquarters can help by providing some protection over pure hosted VoIP services. They offer multiple WAN interfaces that let businesses add extra, albeit costly, links to serve as backups if one fails. Businesses can also take advantage of the various functions that an IP-PBX system offers, such as unlimited extensions and voice mail boxes, caller ID customizing, conferencing, interactive voice response and more. But IP-PBXes are still reliant on WAN performance and offer limited monitoring features. Thus, users and system administrators might not even know about an outage until they can’t make or receive calls. Some hosted VoIP services include a hosted IP-PBX, which typically includes backup, storage and failover functions, as well as limited monitoring.

Boosting Performance through Bonding and Armor

Mushroom Networks has developed several technologies designed to improve the performance, reliability and intelligence of a range of Internet connection applications, including VoIP services. The San Diego, Calif., company’s WAN virtualization solution leverages virtual leased lines (VLLs) and its patented Broadband Bonding, a technique that melds various numbers of Internet lines into a single connection. WAN virtualization is a software-based technology that uncouples operating systems and applications from the physical hardware, so infrastructure can be consolidated and application and communications resources can be pooled within virtualized environments. WAN virtualization adds intelligence and management so network managers can dynamically build a simpler, higher-performing IP pipe out of real WAN resources, including existing private WANs and various Internet WAN links like DSL, cable, fiber, wireless and others. The solution is delivered via the Truffle appliance, a packet level load balancing router with WAN aggregation and Internet failover technology.

Using patented Broadband Bonding techniques, Truffle bonds multiple Internet lines into a single connection so that voice applications are clear, consistent and redundant. This provides faster connectivity via the sum of all the line speeds as well as intelligent management of the latency within the tunnel. Broadband Bonding is a cost-effective solution even for global firms that have hundreds of branch offices scattered around the world because it can be used with existing infrastructure, giving disparate offices the same level of connectivity as headquarters without a large capital outlay. The end result is a faster connection with multiple built-in redundancies that can automatically shield applications such as VoIP from negative network events and outages. Broadband Bonding also combines the best attributes of the various connections, boosting speeds and reliability.
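To make the two ideas in that description concrete (capacity that adds up across lines, and per-packet decisions that route around a slow or dead line), here is a toy sketch. It is not Mushroom Networks' algorithm, which the article does not describe; the `Link` class, the sample numbers and the lowest-latency rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Link:
    """One WAN line as seen by a hypothetical bonding router."""
    name: str
    mbps: float        # nominal line speed
    latency_ms: float  # most recent measured latency
    up: bool = True


def bonded_capacity_mbps(links: List[Link]) -> float:
    """Aggregate capacity: the sum of the speeds of all working lines."""
    return sum(link.mbps for link in links if link.up)


def pick_link_for_voice(links: List[Link]) -> Optional[Link]:
    """Choose the working line with the lowest measured latency.

    Returns None when every line is down.
    """
    candidates = [link for link in links if link.up]
    if not candidates:
        return None
    return min(candidates, key=lambda link: link.latency_ms)


if __name__ == "__main__":
    links = [
        Link("dsl", mbps=10, latency_ms=40),
        Link("cable", mbps=50, latency_ms=25),
        Link("lte", mbps=20, latency_ms=60),
    ]
    print(bonded_capacity_mbps(links), pick_link_for_voice(links).name)

    links[1].up = False  # the cable line fails; traffic moves to the others
    print(bonded_capacity_mbps(links), pick_link_for_voice(links).name)
```

A real bonding device would also have to reorder packets that arrive over different lines and react to latency measurements that change from moment to moment; the sketch leaves both out.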

Mushroom Networks’ newest technology, Application Armor, shields VoIP services from the negative effects of IP jitter, latency, packet drops, link disconnects and other issues. This technology relies on a research field known as Network Calculus, which models and optimizes communication resources. Through decision algorithms, Application Armor monitors traffic and refines routing in the aggregated, bonded pipe by enforcing application-specific goals, whether the goal is higher throughput or reduced latency.

VoIP at Broker Houlihan Lawrence – Big Savings and Performance

New York area broker Houlihan Lawrence – the nation’s 15th largest independent realtor – has cut its telecommunications bill by nearly 75 percent by deploying Mushroom Networks’ Truffle appliances in its branch offices. The agency began using Truffle shortly after Superstorm Sandy, which came ashore near Atlantic City, New Jersey last year, took out the company’s slow and costly MPLS communications network. After the initial deployment to support mission-critical data applications including customer relationship management and email, Houlihan Lawrence deployed a state-of-the-art VoIP system and runs voice communications through Mushroom Networks’ solution. The ability to diversify connections across multiple providers and multiple paths assures automated failover in the event a connection goes down, and the Application Armor protects each packet, whether it’s carrying voice or data, to ensure quality and performance are unfailing and crystal clear.

Hosted, cloud-based Voice over IP (VoIP) and IP-PBX technologies help companies like Houlihan Lawrence dramatically reduce costs for equipment, lines, manpower, and maintenance. But those savings are far from ideal if they come without reliability, quality and failover capabilities. New technologies, including Mushroom Networks’ Broadband Bonding and Application Armor, can optimize IP performance, boost connectivity speeds, improve monitoring and shield VoIP services from jitter, latency, packet loss, link loss and other unwanted behaviors that degrade performance.

Dr. Cahit Jay Akin is the co-founder and chief executive officer of Mushroom Networks, a privately held company based in San Diego, CA, providing broadband products and solutions for a range of Internet applications.