I have some deep seated opinions about user interfaces and usability. It normally only takes me a few seconds to get irritated by a new application or device, since they almost always contravene one or more of my fundamental precepts of usability. So when I see a product that gets it righter than I could have done myself, I have to say it warms my heart.

I just noticed a few minutes ago, using Chrome, that the tabs behave in a better way than on any other browser that I have checked (Safari, Firefox, IE8). If you have a lot of tabs open, and you click on an X to close one of them, the tabs rearrange themselves so that the X of the next tab is right under the mouse, ready to get clicked to close that one too. Then after closing all the tabs that you are no longer interested in, when you click on a remaining one, the tabs rearrange themselves to a right size. This is a very subtle user interface feature. Chrome has another that is a monster, not subtle at all, and so nice that only stubborn sour grapes (or maybe patents) stop the others from emulating it. That is the single input field for URLs and searches. I’m going to talk about how that fits with my ideas about user interface design in just a moment, but first let’s go back to the tab sizing on closing with the mouse.

I like this feature because it took a programmer some effort to get it right, yet it only saves a user a fraction of a second each time it is used, and only some users close tabs with the mouse (I normally use Cmd-W), and only some users open large numbers of tabs simultaneously. So why did the programmer take the trouble? There are at least two good reasons: first, let’s suppose that 100 million people use the Chrome browser, and that they each use the mouse to close 12 tabs a day, and that in 3 of these closings, this feature saved the user from moving the mouse, and the time saved for each of these three mouse movements was a third of a second. The aggregate time saved per day across 100 million users is 100 million seconds. At 2,000 working hours per year, that’s more than 10 work-years saved per day. The altruistic programmer sacrificed an hour or a day or whatever of his valuable time, to give the world far more. But does anybody apart from me notice? As I have remarked before, at some level the answer is yes.

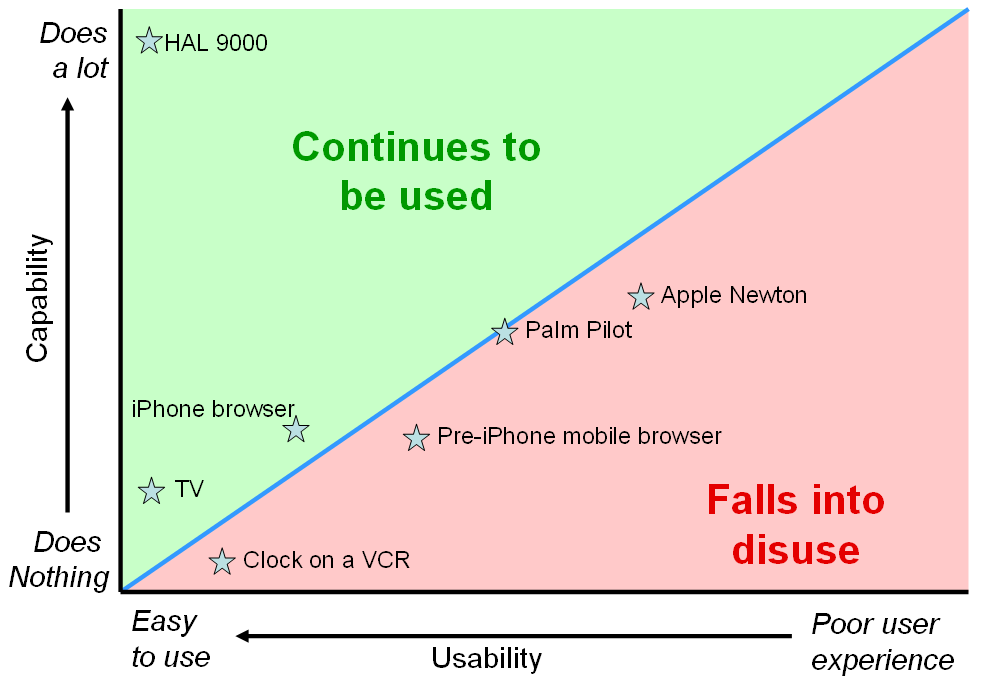

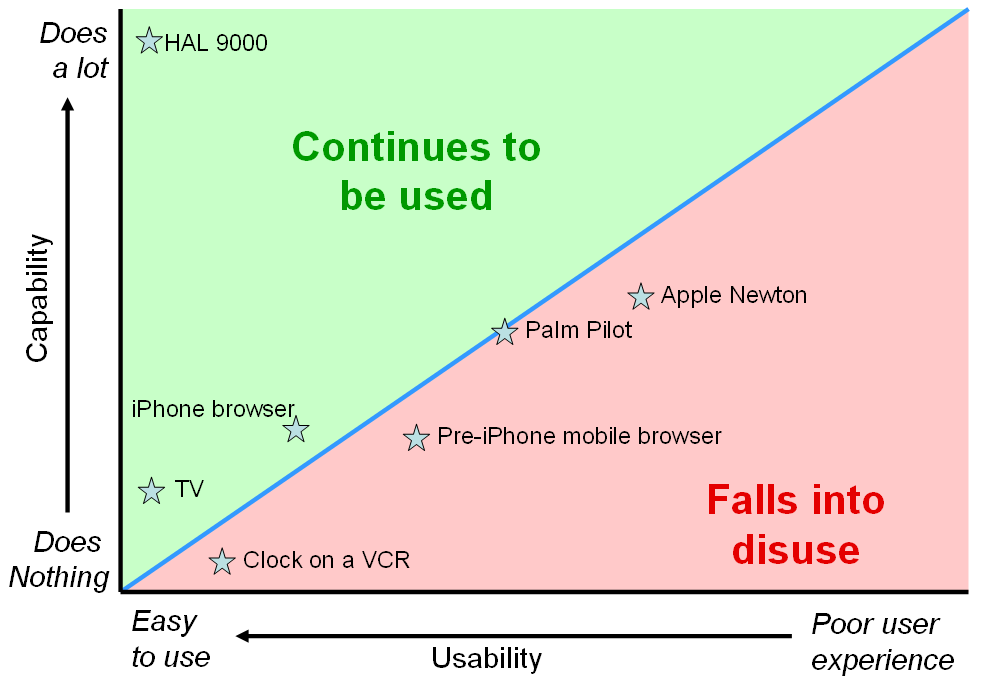

The second reason it was a good idea for the programmer to take this trouble is to do with the nature of usability and choice of products. There is plenty of competition in the browser market, and it is trivial for a user to switch browsers. Usability of a program is an accretion of lots of little ingredients. So in the solution space addressed by a particular application, the potential gradation of usability is very fine-grained, each tiny design decision moving the needle a tiny increment in the direction of greater or lesser usability. But although ease of use of an application is an infinitely variable property, whether a product is actually used or not is effectively a binary property. It is a very unusual consumer (guilty!) who continues to use multiple browsers on a daily basis. Even if you start out that way you will eventually fall into the habit of using just one. For each user of a product, there is a threshold on that infinite gradation of usability, that balances against the benefit of using the product. If the product falls below that effort/benefit threshold it gradually falls into disuse. Above that threshold the user forms the habit of using it regularly. Many years ago I bought a Palm Pilot. For me, that user interface was right on my threshold. It teetered there for several weeks as I tried to get into the habit of depending on it, but after I missed a couple of important appointments because I had neglected to put them into the device, I went back to my trusty pocket Day-Timer. For other people, the Palm Pilot was above their threshold of usability, and they loved it, used it and depended on it. Not all products are so close to the threshold of usability. Some fall way below it. You have never heard of them – or maybe you have: how about the Apple Newton? And some land way above it; before the iPhone nobody browsed the Internet on their phones – the experience was too painful. In one leap the iPhone landed so far above that threshold that it routed the entire industry.

The point here is that the ‘actual use’ threshold is a a razor-thin line on the smooth scale of usability, so if a product lies close to that line, the tiniest, most subtle change to usability can move it from one side of the line to the other. And in a competitive market where the cost of switching is low, that line isn’t static; the competition is continuously moving the threshold up. This is consistent with “natural selection by variation and survival of the fittest.” So product managers who believe their usability is “good enough,” and that they need to focus on new features to beat the competition are often misplacing their efforts – they may be moving their product further to the right on the diagram above than they are moving it up.

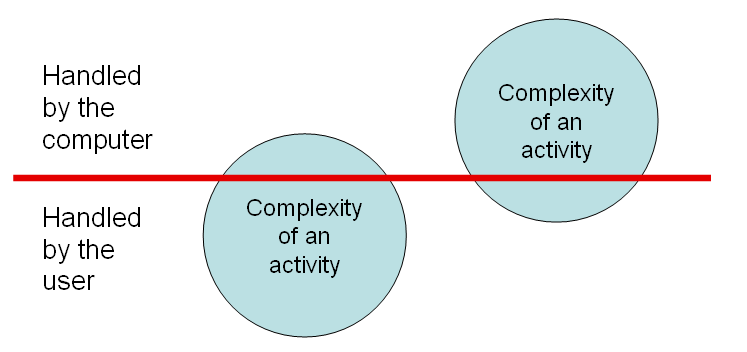

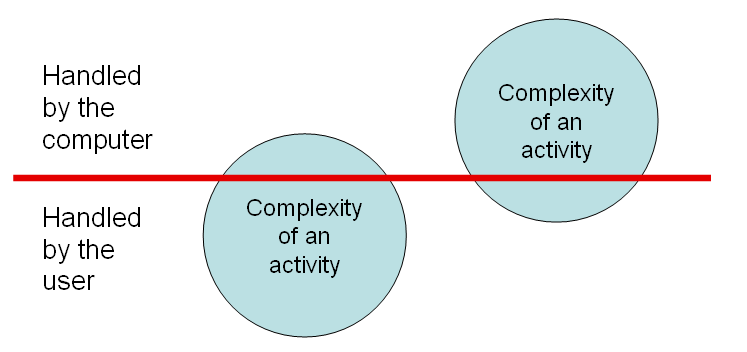

Now let’s go on to Chrome’s single field for URLs and searches. Computer applications address complicated problem spaces. In the diagram below, each circle represents the aggregate complexity of an activity performed with the help of a computer. The horizontal red line represents the division between the complexity handled by the user, and that handled by the computer. In the left circle most of the complexity is dealt with by the user, in the right circle most is dealt with by the computer. For a given problem space, an application will fall somewhere on this line. For searching databases HAL 9000 has the circle almost entirely above this line, SQL is way further down. The classic example of this is the graphical user interface. It is vastly more programming work to create a GUI system like Windows than a command-line system like MS-DOS, and a GUI is correspondingly vastly easier on the user.

Its single field for typing queries and URLs clearly makes Chrome sit higher on this line than the browsers that use two fields. With Chrome the user has less work to do: he just gives the browser an instruction. With the others the user has to both give the instruction and tell the computer what kind of instruction it is. On the other hand, the programmer has to do more work, because he has to write code to determine whether the user is typing a URL or a search. But this is always going to be the case when you make a task of a given complexity easier on the user. In order to relieve the user, the computer has to handle more complexity. That means more work for the programmer. Hard-to-use applications are the result of lazy programmers.

The programming required to implement the single field for URLs and searches is actually trivial. All browsers have code to try to form a URL out of what’s typed into the address field; the programmer just has to assume it’s a search when that code can’t generate a URL. So now, having checked my four browsers, I have to partially eat my words. Both Firefox and IE8, even though they have separate fields for web addresses and searches, do exactly what I just said: address field input that can’t be made into a URL is treated as a search query. Safari, on the other hand, falls into the lazy programmer hall of shame.

This may be a result of a common “ease of use” fallacy: that what is easier for the programmer to conceive is easier for the user to use. The programmer has to imagine the entire solution space, while the user only has to deal with what he comes across. I can imagine a Safari programmer saying “We have to meet user expectations consistently – it will be confusing if the address field behaves in an unexpected way by doing a search when the user was simply mistyping a URL.” The fallacy of this argument is that while the premise is true (“it is confusing to behave in an unexpected way,”) you can safely assume that an error message is always unexpected, so rather than deliver one of those, the kind programmer will look at what is provoking the error message, and try to guess what the user might have been trying to achieve, and deliver that instead.

There are two classes of user mistake here: one is typing into the “wrong” field, the other is mistyping a URL. On all these browsers, if you mistype a URL you get an unwanted result. On Safari it’s an error page, on the others it’s an error page or a search, depending on what you typed. So Safari isn’t better, it just responds differently to your mistake. But if you make the other kind of “mistake,” typing a search into the “wrong” field, Safari gives an error, while the others give you what you actually wanted. So in this respect, they are twice as good, because the computer has gracefully relieved the user of some work by figuring out what they really wanted. But Chrome goes one step further, making it impossible to type into the “wrong” field, because there is only one field. That’s a better design in my opinion, though I’m open to changing my mind: the designers at Firefox and Microsoft may argue that they are giving the best of both worlds, since users accustomed to separate fields for search and addresses might be confused if they can’t find a separate search field.