Service providers can offer any product they wish. But consumers have certain expectations when a product is described as ‘Internet Service,’ so net neutrality regulations are similar to truth-in-advertising rules. The primary expectation that users have of an Internet Service Provider (ISP) is that it will deliver IP datagrams (packets) without snooping inside them and then slowing them down, dropping them, or charging more for them based on what they contain.

The analogy with the postal service is obvious, and the expectation is similar. When Holland passed a net neutrality law last week, one of the bill’s co-authors, Labor MP Martijn van Dam, compared Dutch ISP KPN to “a postal worker who delivers a letter, looks to see what’s in it, and then claims he hasn’t read it.” This snooping was apparently what set off the furor that led to the legislation:

“At a presentation to investors in London on May 10, analysts questioned where KPN had obtained the rapid adoption figures for WhatsApp. A midlevel KPN executive explained that the operator had deployed analytical software which uses a technology called deep packet inspection to scrutinize the communication habits of individual users. The disclosure, widely reported in the Dutch news media, set off an uproar that fueled the legislative drive, which in less than two months culminated in lawmakers adopting the Continent’s first net neutrality measures with real teeth.” (New York Times)

Taking the analogy with the postal service a little further: the postal service charges by volume. The ISP industry behaves similarly, with tiered rates depending on bandwidth. Net neutrality advocates don’t object to this.

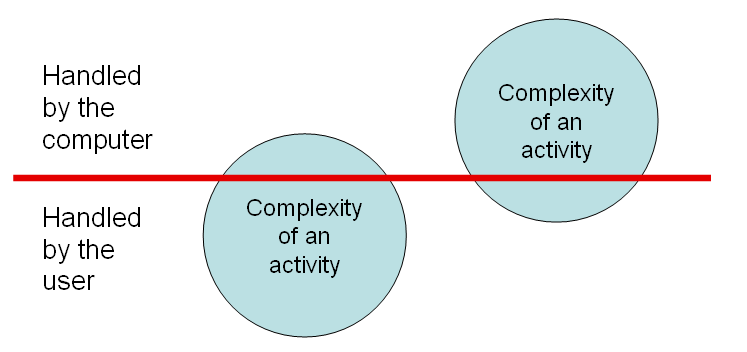

The postal service also charges by quality of service, like delivery within a certain time, and guaranteed delivery. ISPs don’t offer this service to consumers, though it is one that subscribers would probably pay for if applied voluntarily and transparently. For example, suppose I subscribe to 10 megabits per second of Internet connectivity. I might be willing to pay a premium for a guaranteed maximum delay on UDP packets. The ISP could then add value for me by prioritizing UDP packets over TCP when my bandwidth demand exceeded 10 megabits per second. Is looking at the protocol header snooping inside the packets? Kind of, because the TCP or UDP header is inside the IP packet, but on the other hand, it might be like looking at a piece of mail to see if it is marked Priority or bulk rate.
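To make the Priority-versus-bulk-rate comparison concrete, here is a minimal Python sketch of how a router could tell UDP from TCP. It relies on one fact about IPv4: the header carries a one-byte Protocol field at offset 9 (6 for TCP, 17 for UDP), so the distinction can be read from the IP header itself, much like a marking on the outside of an envelope. The function names, the `prioritize` policy, and the `make_header` demo helper are my own illustrative assumptions, not anything an ISP is known to run, and the sketch ignores IPv6 and real queueing disciplines.

```python
import struct

# Protocol numbers carried in the IPv4 header's Protocol field.
PROTO_TCP = 6
PROTO_UDP = 17


def transport_protocol(packet: bytes) -> int:
    """Return the Protocol field (byte 9) of an IPv4 packet."""
    if len(packet) < 20:
        raise ValueError("too short to be an IPv4 packet")
    if packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    return packet[9]


def prioritize(queue):
    """Hypothetical policy: UDP packets first, all others after.

    sorted() is stable, so arrival order is kept within each group.
    """
    return sorted(queue, key=lambda p: transport_protocol(p) != PROTO_UDP)


def make_header(protocol: int) -> bytes:
    """Build a bare 20-byte IPv4 header for the demo (checksum left at zero)."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45,                # version 4, header length 5 words
        0,                   # type of service
        20,                  # total length: header only
        0,                   # identification
        0,                   # flags and fragment offset
        64,                  # time to live
        protocol,            # the field the classifier reads
        0,                   # header checksum (not computed here)
        bytes([10, 0, 0, 1]),
        bytes([10, 0, 0, 2]),
    )


if __name__ == "__main__":
    tcp, udp = make_header(PROTO_TCP), make_header(PROTO_UDP)
    ordered = prioritize([tcp, udp, tcp])
    print([transport_protocol(p) for p in ordered])
```

Note that nothing here reads the payload. Deep packet inspection of the kind KPN used goes further, examining the contents to identify the application.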

A subscriber may even be interested in paying an ISP for services based on deep packet inspection. In a recent conversation, an executive at a major wireless carrier likened net neutrality to pollution. I am not sure what he meant by this, but he may have been thinking of spam-like traffic that nobody wants, but that neutrality regulations might force a service provider to carry. I use Gmail as my email service, and I am grateful for the Gmail spam filter, which works quite well. If a service provider were to use deep packet inspection to implement malicious-site blocking (like phishing site blocking or unintentional download blocking) or parental controls, I would consider this a service worth paying for, since the PC-based capabilities in this category are too easily circumvented by inexperienced users.

Notice that all these suggestions are for voluntary services. When a company opts to impose a product on a customer when the customer prefers an alternative one, the customer is justifiably irked.

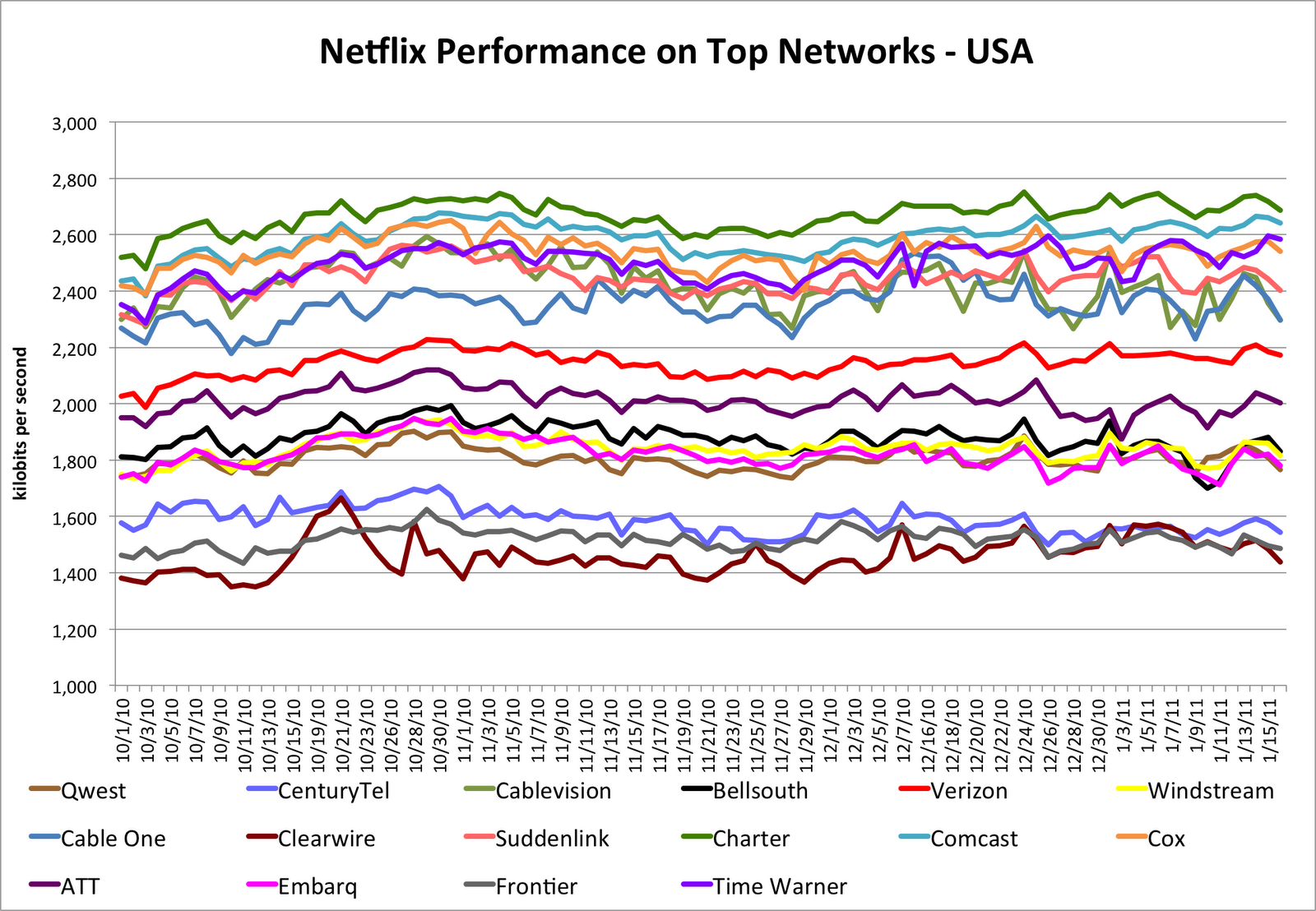

What provoked KPN to start blocking WhatsApp was that KPN subscribers were abandoning KPN’s SMS service in favor of WhatsApp, which caused a revenue drop. Similarly, as VoIP services like Skype grow, voice revenues for service providers will drop, and service providers will be motivated to block or impair the performance of those competing services.

The dumb-pipe nature of IP has enabled the explosion of innovation in services and products that we see on the Internet. Unfortunately for the big telcos and cable companies, many of these innovations disrupt their other service offerings. Internet technology enables third parties to compete with legacy cash cows like voice, SMS and TV. The ISP’s rational response is to do whatever is in its power to protect those cash cows. Without network neutrality regulations, the ISPs are duty-bound to their investors to protect the profitability of their other product lines by blocking the competitors on their Internet service, just as KPN did. Net neutrality regulation is designed to prevent such anti-competitive behavior. A neutral net obliges ISPs to allow competition on their access links.

So which is the free-market approach? Allowing network owners to do whatever they want on their networks and block any traffic they don’t like, or ensuring that the Internet is a level playing field where entities with the power to block third parties are prevented from doing so? The former is the free market of commerce; the latter is the free market of ideas. In this case they are in opposition to each other.