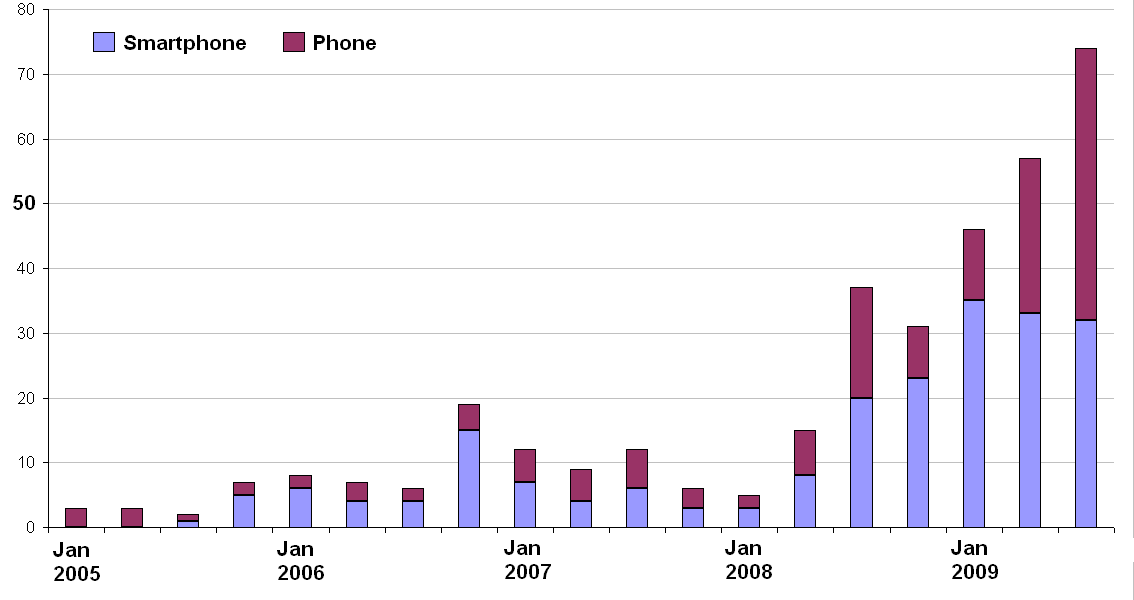

This piece from the Aberdeen Group shows accelerometers and gyroscopes becoming universal in smartphones by 2018.

Accelerometers were exotic in smartphones when the first iPhone came out – used mainly for sensing the orientation of the phone for displaying portrait or landscape mode. Then came the idea of using them for dead-reckoning-assist in location-sensing. iPhones have always had accelerometers; since all current smartphones are basically copies of the original iPhone, it is actually odd that some smartphones lack accelerometers.

Predictably, when supplied with a hardware feature, the app developer community came up with a ton of creative uses for the accelerometer: magic tricks, pedometers, air-mice, and even user authentication based on waving the phone around.

Not all sensor technologies are so fertile. For example, the proximity sensor is still pretty much only used to dim the screen and disable touch sensing when you hold the phone to your ear or put it in your pocket.

So what about the user-facing camera? Is it a one-trick pony like the proximity sensor, or a springboard to innovation like the accelerometer? Although videophoning has been a perennial bust, I would argue for the latter: the you-facing camera is pregnant with possibilities as a sensor.

Looking at the Aberdeen report, I was curious to see “gesture recognition” on a list of features that will appear on 60% of phones by 2018. The others on the list are hardware features, but once you have a camera, gesture recognition is just a matter of software. (The Kinect is a sidetrack to this, provoked by lack of compute power.)

In a phone, compute power means battery drain, so that’s a limitation on using the camera as a sensor. But each generation of chips becomes more power-efficient as well as more powerful, and as phone makers add more and more GPU cores, the developer community finds new uses for them that max them out.

Gesture recognition is already here with Samsung, and will soon be on every Android device. The industry is gearing up for innovation in phone-based computer vision with OpenVX from Khronos. When always-on computer vision becomes feasible from a power-drain point of view, gesture recognition and face tracking will look like baby steps. Smart developers will come up with killer applications that are currently unimaginable. For example, how about a library implementation of Paul Ekman’s emotion recognition algorithms to let you know how you are really feeling right now? Or the same library working in concert with Google Glass, so you will never again be oblivious to your spouse’s emotional temperature?

Update November 19th: Here’s some news and a little bit of technology background on this topic…

Update November 22nd: It looks like a company is already working on the emotion-recognition technology.