When the iPhone came out it redefined what a smartphone is. The others scrambled to catch up, and now with Android they pretty much have. The iPhone 4 is not in a different league from its competitors the way the original iPhone was. So I have been trying to decide between the iPhone 4 and the EVO for a while. I didn’t look at the Droid X or the Samsung Galaxy S, either of which may be better in some ways than the EVO.

Each phone’s hardware and software have stronger and weaker points. The Apple wins on the subtle user interface ingredients that add up to delight. It is a more polished user experience. Lots of little things. For example, I was looking at the clock applications. The Apple stopwatch has a lap feature and the Android doesn’t. I use the timer a lot; the Android timer copied the Apple look and feel almost exactly, but a little worse. It added a seconds display, which is good, but the spin-wheel to set the timer doesn’t wrap. To get from 59 seconds to 0 seconds you have to spin the display all the way back through. The whole idea of a clock is that it wraps, so this indicates that the Android clock programmer didn’t really understand time. Plus, when the timer is actually running, the Android cutely just animates the time-set display, while the Apple timer clears the screen and shows a countdown. This is debatable, but I think the Apple way is better. The countdown display is less cluttered, more readable, and more clearly in a “timer running” state. The Android clock has a wonderful “desk clock” mode, which the iPhone lacks. I was delighted with the idea, especially the night mode, which dims the screen and lets you use it as a bedside clock. Unfortunately, when I came to actually use it, the hardware let the software down. Even in night mode the screen is uncomfortably bright, so I had to turn the phone face down on the bedside table.
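To make the spin-wheel complaint concrete, here is a minimal sketch of the difference between a wheel that wraps and one that stops at its ends. This is not code from either phone; the function names and the 60-position wheel are my own assumptions for illustration.

```python
# Hypothetical sketch: a 60-position seconds wheel, not either phone's actual code.

def spin_wrapping(value, steps, size=60):
    """Move the wheel by `steps` notches, wrapping past the ends (59 -> 0)."""
    return (value + steps) % size


def spin_clamped(value, steps, size=60):
    """Move the wheel by `steps` notches, stopping dead at 0 and size - 1."""
    return max(0, min(size - 1, value + steps))


def shortest_spin(start, target, size=60):
    """Signed number of notches for the shortest route on a wrapping wheel."""
    forward = (target - start) % size
    # Go forward if that is no longer than going backward.
    return forward if forward <= size - forward else forward - size
```

On the wrapping wheel, `spin_wrapping(59, 1)` lands on 0 after one notch, and `shortest_spin(59, 0)` is 1. On the clamped wheel, `spin_clamped(59, 1)` stays stuck at 59, so the only way to reach 0 is 59 notches in the other direction.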

The EVO wins on screen size. Its 4.3 inch screen is way better than the iPhone’s 3.5 inch screen. The “retina” resolution on the iPhone may look like a better specification, but the difference in image quality is imperceptible to my eye, and the greater size of the EVO screen is a compelling advantage.

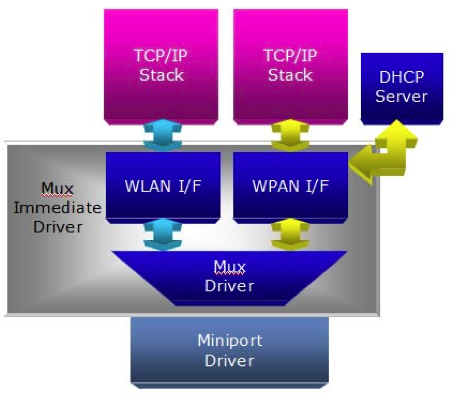

The iPhone has far more apps, but there are some good ones on the Android that are missing on the iPhone, for example the amazing Wi-Fi Analyzer. On the other hand, this is also an example of the immaturity of the Android platform, since there is a bug in Android’s Wi-Fi support that makes the Wi-Fi Analyzer report out-of-date results. Other nice Android features are the voice search feature and the universal “back” button. Of course you can get the same voice search with the iPhone Google app, but the iPhone lacks a universal “back” button.

The GPS on the EVO blows away the GPS on the iPhone for accuracy and responsiveness. I experimented with the Google Maps app on each phone, walking up and down my street. Apple changed the GPS chip in this rev of the iPhone, going from an Infineon/GlobalLocate to a Broadcom/GlobalLocate. The EVO’s GPS is built into the Qualcomm transceiver chip. The superior performance may be a side effect of assistance from the CDMA radio network.

Incidentally, the GPS test revealed that the screens are equally horrible under bright sunshine.

The iPhone is smaller and thinner, though the smallness is partly a function of the smaller screen size.

The EVO has better WAN speed, thanks to the Clearwire WiMAX network, but my data-heavy usage is mainly over Wi-Fi in my home, so that’s not a huge concern for me.

Battery life is an issue. I haven’t done proper tests, but I have noticed that the EVO seems to need charging more often than the iPhone.

Shutter lag is a major concern for me. On almost all digital cameras and phones I end up taking many photos of my shoes as I put the camera back in my pocket after pressing the shutter button and assuming the photo got taken at that time rather than half a second later. I just can’t get into the habit of standing still and waiting for a while after pressing the shutter button. The iPhone and the EVO are about even on this score, both sometimes taking an inordinately long time to respond to the shutter – presumably auto-focusing. The pictures taken with the iPhone and the EVO look very different; the iPhone camera has a wider angle, but the picture quality of each is adequate for snapshots. On balance the iPhone photos appeal to my eye more than the EVO ones.

For me the iPhone’s antenna issue is significant. After dropping several calls I stuck some black electrical tape over the corner of the phone, which seems to have somewhat fixed it. Coverage inside my home in the middle of Dallas is horrible for both AT&T and Sprint.

The iPhone’s FM radio chip isn’t enabled, so I was pleased when I saw FM radio as a built-in app on the EVO, but disappointed when I fired it up and discovered that it needed a headset to be plugged in to act as an antenna. Modern FM chips should work with internal antennas. In any case, the killer app for FM radio is on the transmit side, so you can play music from your phone through your car stereo. Neither phone supports that yet.

So on the plus side, the EVO’s compelling advantage is the screen size. On the negative side, it is bulkier, the battery life is shorter, and the software experience isn’t quite as polished.

The bottom line is that the iPhone is no longer in a class of its own. The Android iClones are respectable alternatives.

It was a tough decision, but I ended up sticking with the iPhone.